Memory is subjective and colored by experience. Ask someone to narrate their day, and they will tell you a story organized around the events they found personally significant: an argument they had, a delicious meal they ate, or a decision they made. The same goes for their year, their childhood, or their last job. Meaningfulness drives how we store and navigate our memories, and the result is tremendous variation from person to person, which poses a challenge for scientific study.

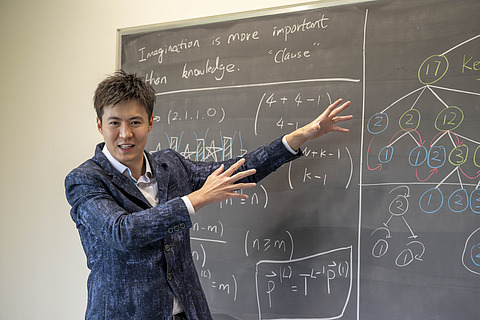

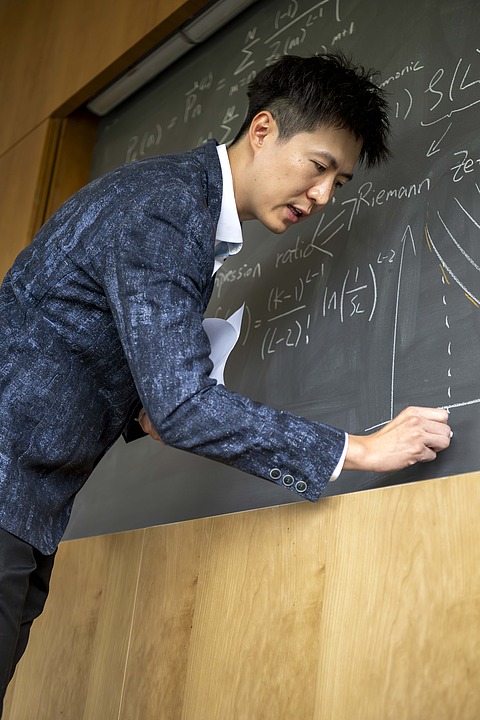

Weishun Zhong, Eric and Wendy Schmidt Member in Biology in the School of Natural Sciences, and Misha Tsodyks, C. V. Starr Professor in the School, have led a team of IAS scholars to a surprising discovery about the individualized content and organization of our memories: they are united by a common mathematical structure.

In a study of how people recall stories, the team discovered a general law that governs the way we store and compress meaningful memories. Although memories reflect each person’s sense of what matters, our brains use a common architecture to organize our recollections, nesting information in a hierarchical structure that helps us navigate them efficiently.

Even more striking is the team’s finding that these hierarchical structures give rise to a universal pattern in the way people encode and communicate what they remember. For the first time, scientists are offering evidence that humans compress information into a limited number of “building blocks” that represent detailed stories.

These units of information are capacious, able to summarize ever greater swaths of information as a narrative grows. But no matter how long or short the original story—whether it is War and Peace or a quick jolt of horror from Stephen King—people will still use the same finite set of units to relay their memory of the narrative.

In addition to Zhong and Tsodyks, Tankut Can, Starr Foundation Member in Biology (2021–24); Antonis Georgiou, Charles L. Brown Member in Biology and Martin A. and Helen Chooljian Member in Biology (2024–26); Ilya Shnayderman of the Weizmann Institute of Science; and Mikhail Katkov, frequent Visitor in the School of Natural Sciences, all contributed substantially to the paper, “Random Tree Model of Meaningful Memory,” which has been published in Physical Review Letters.

Physics meets neuroscience

Zhong is a physicist. A specialist in statistical mechanics, he applies to neuroscience methods that were originally developed to explain complex physical systems probabilistically.

Where others with his training might explore the movement of atoms in novel materials or study the interaction between proteins and cells, Zhong creates mathematical models of cognition and intelligence.

His field has an odd-couple quality. Physics is known for its relentless simplification, while neuroscience embraces complexity to understand the human mind.

“Physics often relies on simplified models that allow us to predict typical behaviors,” Zhong said. “A high school physics class might focus, for example, on problems asking questions about a ball that’s traveling over a completely flat surface with zero wind.”

These models are sometimes derided as “spherical cows.” There’s an old joke where a farmer assembles a team of scientists to solve a milk production problem. The physicist returns to the farm first with a solution, confident it will work—as long as the cow is perfectly spherical and contained within a vacuum.

“There’s a similar joke in our field,” Zhong said. “A physicist asks his friend, a neuroscientist, what he studies. The neuroscientist says, ‘Here’s a brain. It has two sides,’ and the physicist interrupts. ‘Back up! Too complicated!’”

“But the real world is hopelessly complicated,” Zhong added. “Our simplifying methods create necessary tools to understand and explore this complexity. At the same time, the messiness, beauty, and mystery of the brain motivate us to creatively improve these methods. There’s benefit in both directions.”

Hierarchy, compression, abstraction

Zhong’s team used statistical methods to create an elegant model that represents our brain’s storage and retrieval of narrative memories.

This model, described as a “random tree ensemble,” highlights above all the efficiency of our biology. Our working memory—the system our brains use to hold, sort, and retrieve information for active processing—is, by nature, limited. The model displays how our brains optimize for this limitation, using hierarchical networks that cascade downward in a tree-like formation to nest details inside meaningful events.

To recall a story or a series of autobiographical events, we travel down this tree, from the most meaningful events to the details nested inside them. This layered descent is how the model accounts for the complex nature of narrative memory.
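For readers who want to see the tree picture in code, here is a minimal Python sketch. It is a toy under stated assumptions, not the team’s published model: each passage is cut into at most k contiguous pieces at uniformly random points, and recall is modeled as descending the tree to a fixed depth, a stand-in for the working-memory limit. The names `build_tree` and `recall`, and the values k=3 and depth=2, are hypothetical choices for illustration.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Node:
    start: int  # index of the first clause this node summarizes
    end: int    # one past the last clause it summarizes
    children: list = field(default_factory=list)


def build_tree(start, end, k, max_depth, rng):
    """Toy assumption: split clauses [start, end) into at most k contiguous
    chunks at uniformly random cut points, recursing up to max_depth levels."""
    node = Node(start, end)
    length = end - start
    if max_depth == 0 or length <= 1:
        return node
    n_parts = min(k, length)
    # n_parts - 1 distinct cut points strictly inside the interval
    cuts = sorted(rng.sample(range(start + 1, end), n_parts - 1))
    bounds = [start] + cuts + [end]
    for a, b in zip(bounds, bounds[1:]):
        node.children.append(build_tree(a, b, k, max_depth - 1, rng))
    return node


def recall(node, depth):
    """Walk down the tree to a fixed depth (hypothetical working-memory limit);
    each node reached stands for one recalled clause, as a (start, end) span."""
    if depth == 0 or not node.children:
        return [(node.start, node.end)]
    recalled = []
    for child in node.children:
        recalled.extend(recall(child, depth - 1))
    return recalled


rng = random.Random(0)
for n_clauses in (50, 5_000):
    root = build_tree(0, n_clauses, k=3, max_depth=2, rng=rng)
    print(n_clauses, "clauses ->", len(recall(root, depth=2)), "recalled units")
```

In this toy, however long the story, recall never exceeds k**depth units (9 here); what grows with the story is how many original clauses each recalled unit summarizes.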

What’s most significant about the study is its ability to quantify how our memories compress and abstract narratives. Measuring this kind of compression has long been a sticking point in memory research.

“Until now, quantitative studies of memory have relied on non-meaningful material,” Zhong explained. “Researchers use lists of random words. A word-for-word, yes-or-no version of recall is easy to quantify. But this isn’t how real memory works! We remember our days, our weeks, and our lives as stories, and we tell these stories to each other. We neither remember narratives verbatim, nor do we all use the same rigid schematic organizations to communicate. Rather, we think and communicate the meaningful information using paraphrases, summaries, and abstraction.”

The question the team faced, then, was how they would account for this kind of abstraction and variation in a rigorous, quantitative way.

“There’s no such thing as summarization in physics,” Zhong emphasized.

To explore this more true-to-life aspect of memory, the team, led by Georgiou and Can, designed an experiment whose results were published earlier this year in Learning and Memory.

In the experiment, 1,100 participants each received one of 11 emotionally resonant stories from influential sociolinguist William Labov’s The Language of Life and Death. Researchers gathered and analyzed the participants’ written recollections, which typically ran from 10 to 30 clauses each.

“This is where AI comes in,” Zhong said. “With this kind of large-scale data, we can now use a large language model (LLM) to match each participant’s recollection with the original text.”

The IAS team modeled subjects’ recollections using linguistic clauses as fundamental building blocks (similar to how physics models the collective behavior of atoms) and analyzed their structural organization statistically.

The resulting model shows that the average length of our recall of a story does not increase in proportion to the length of the story itself. Instead, as a story becomes longer and more detailed, our brains compress the narrative into increasingly compact summaries.

The model also makes an important new prediction: as narratives grow longer, a universal, scale-invariant limit emerges.

“Whether we give people a novel or a short story, the average distribution of their summarization and compression levels would be exactly the same,” Zhong explained. “The chance a clause from the recall will summarize 10% of the story is independent of the story’s length, for example. Our brains seem to have evolved to consolidate information in this universal way to make the most of our physiology’s advantages and limitations.”
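Zhong’s example, the chance that a recalled clause summarizes at least 10% of the story, can be illustrated with a toy simulation. This is a sketch, not the published analysis: it assumes each recalled clause corresponds to a chunk reached by splitting the story at random into at most k contiguous parts, depth times over, with k=3 and depth=2 chosen arbitrarily. The function names are hypothetical.

```python
import random


def recalled_fractions(n_clauses, k, depth, rng):
    """Toy assumption: split the story into at most k random contiguous chunks,
    repeat `depth` times, and return the share of the story that each final
    chunk (one recalled clause) summarizes."""
    segments = [(0, n_clauses)]
    for _ in range(depth):
        next_level = []
        for a, b in segments:
            if b - a <= 1:  # a single clause cannot be split further
                next_level.append((a, b))
                continue
            n_parts = min(k, b - a)
            cuts = sorted(rng.sample(range(a + 1, b), n_parts - 1))
            bounds = [a] + cuts + [b]
            next_level.extend(zip(bounds, bounds[1:]))
        segments = next_level
    return [(b - a) / n_clauses for a, b in segments]


def share_summarizing_at_least(n_clauses, threshold=0.1, k=3, depth=2,
                               trials=2_000, seed=0):
    """Estimate the chance that a recalled clause covers at least `threshold`
    of the story, pooling clauses over many independently drawn random trees."""
    rng = random.Random(seed)
    hits = total = 0
    for _ in range(trials):
        fractions = recalled_fractions(n_clauses, k, depth, rng)
        hits += sum(f >= threshold for f in fractions)
        total += len(fractions)
    return hits / total


for n in (1_000, 100_000):
    print(f"{n:>7} clauses: P(clause covers >= 10%) ~ {share_summarizing_at_least(n):.3f}")
```

In this toy, the two estimates should agree to within sampling noise, because the share of the story a chunk covers depends only on the random cut proportions, not on how many clauses the story contains.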

A simple model with a promising future

“We give people these narratives, and everyone gets something different from them because of their individual experiences,” said Misha Tsodyks. “But, when we statistically analyze enough of these recollections, we see fascinating general trends. For me, the biggest takeaway is that we were able to find such a simple model for such a complex phenomenon.”

The applications are as interdisciplinary as the theory’s origins.

The mechanism the team discovered can now be further investigated by researchers across many fields in neuroscience and psychology, and biomedical researchers may seek to verify it physiologically in the brain. Additionally, the theory may prove useful for understanding and treating memory-related problems caused by injury, trauma, or disease.

A more immediate application can be envisioned in artificial intelligence and machine learning. The team’s model could inspire new neural network architectures for natural language processing that are more specialized and less resource-intensive than the ones we currently rely on. It may even help AI be more human-like in its outputs.

“Our interdisciplinarity and teamwork were key to the success of this project,” Zhong concluded. “I still get comments from physicists that my research isn’t ‘really’ physics. Some neuroscientists tell me the same about neuroscience. But the payoff speaks for itself. To me, physics is the mindset that things can be understood—across disciplines, across scales. The more open-minded and collaborative we can be, the better our work becomes.”