The Computer Scientist Who Shrinks Big Data

For Jelani Nelson, algorithms represent a wide-open playground. “The design space is just so broad that it’s fun to see what you can come up with,” he said.

Yet the algorithms Nelson devises obey real-world constraints, chief among them the fact that computers cannot store unlimited amounts of data. This poses a challenge for companies like Google and Facebook, which have vast amounts of information streaming into their servers every minute. They’d like to extract patterns from that data quickly, in real time, without having to remember all of it.

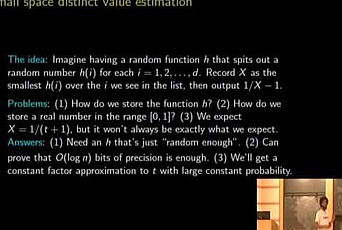

Nelson, 36, a computer scientist at the University of California, Berkeley, expands the theoretical possibilities for low-memory streaming algorithms. He’s discovered the best procedures for answering on-the-fly questions like “How many different users are there?” (known as the distinct elements problem) and “What are the trending search terms right now?” (the frequent items problem).
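To give a flavor of the frequent items problem, here is a minimal sketch of the classic Misra-Gries summary, a textbook low-memory method and not Nelson's own algorithm. The function name `misra_gries`, the parameter `k` and the sample search terms are illustrative assumptions. The summary keeps at most `k - 1` counters no matter how long the stream runs, and any item that makes up more than a `1/k` fraction of the stream is guaranteed to still hold a counter at the end.

```python
def misra_gries(stream, k):
    """Return candidate frequent items using at most k - 1 counters.

    Any item occurring more than len(stream) / k times is guaranteed
    to appear in the result; the stored counts are underestimates.
    """
    counters = {}
    for item in stream:
        if item in counters:
            counters[item] += 1
        elif len(counters) < k - 1:
            counters[item] = 1
        else:
            # No free counter: decrement every counter and drop the zeros.
            for key in list(counters):
                counters[key] -= 1
                if counters[key] == 0:
                    del counters[key]
    return counters


# Hypothetical stream of search terms; "cats" is 6 of the 10 entries.
searches = ["cats", "news", "cats", "weather", "cats",
            "news", "cats", "sports", "cats", "cats"]
trending = misra_gries(searches, k=3)
print(trending)
```

The memory used depends only on `k`, not on the length of the stream. The price is that the surviving counts are lower bounds and some survivors may not be truly frequent, so a second pass is needed when exact counts matter.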

Nelson’s algorithms often use a technique called sketching, which compresses big data sets into smaller components that can be stored using less memory and analyzed quickly.
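As one illustration of sketching, the snippet below estimates the number of distinct users with a "k minimum values" sketch. This is a standard textbook technique chosen for brevity, not the procedure Nelson developed, and the names `estimate_distinct` and `_hash01` and the default `k=256` are assumptions made for the example. Each item is hashed to a number between 0 and 1, and only the `k` smallest hash values are kept; if the k-th smallest is tiny, many distinct items must have been seen.

```python
import hashlib
import heapq


def _hash01(item: str) -> float:
    """Map a string to a pseudo-random float in [0, 1)."""
    digest = hashlib.sha256(item.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def estimate_distinct(stream, k=256):
    """Estimate the number of distinct strings while storing only k hashes."""
    heap = []      # max-heap (negated values) holding the k smallest hashes
    kept = set()   # the same hashes, for fast duplicate checks
    for item in stream:
        h = _hash01(item)
        if h in kept:
            continue                      # repeat of an item already in the sketch
        if len(heap) < k:
            heapq.heappush(heap, -h)
            kept.add(h)
        elif h < -heap[0]:
            # New hash is smaller than the largest one kept: swap it in.
            evicted = -heapq.heappushpop(heap, -h)
            kept.discard(evicted)
            kept.add(h)
    if len(heap) < k:
        return float(len(heap))           # fewer than k distinct items: exact count
    return (k - 1) / (-heap[0])           # standard k-minimum-values estimate


# Hypothetical stream: 10,000 distinct user IDs, each seen three times.
visits = [f"user{i}" for i in range(10_000)] * 3
print(round(estimate_distinct(visits)))
```

The sketch holds 256 numbers regardless of how many visits arrive, and repeats of the same user hash to the same value, so they never inflate the estimate. A larger `k` trades more memory for a tighter estimate.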