Global Health Interventions in Africa

Historical epidemiologists are beginning to explore the documentary record of interventions in tropical Africa to prevent the transmission of infectious diseases and reduce their prevalence. Some interventions against individual diseases began in the late nineteenth- and twentieth-century era of colonialism, when Europeans founded research institutes to investigate the challenges of tropical diseases and deployed their new medical knowledge in mobile campaigns to treat sleeping sickness, tuberculosis, yaws, yellow fever, onchocerciasis, and other diseases. In the aftermath of the Second World War, the newly founded World Health Organization (WHO) provided expert advice to colonial and later independent African governments and encouraged a more global approach to disease control. Since the late twentieth century, international and bilateral organizations, philanthropic organizations, nongovernmental organizations, and public-private partnerships in league with African governments have undertaken initiatives to control diseases and to shape health systems.

The subdiscipline of historical epidemiology, located at the intersection of medical history, epidemiology, and other allied public health disciplines, is in development. The field is multidisciplinary. It requires the integration of different kinds of scientific knowledge and an understanding of changing social, political, economic, and epidemiological contexts. Its premise is that an understanding of past global health interventions can contribute to an improvement in the design and implementation of future interventions.

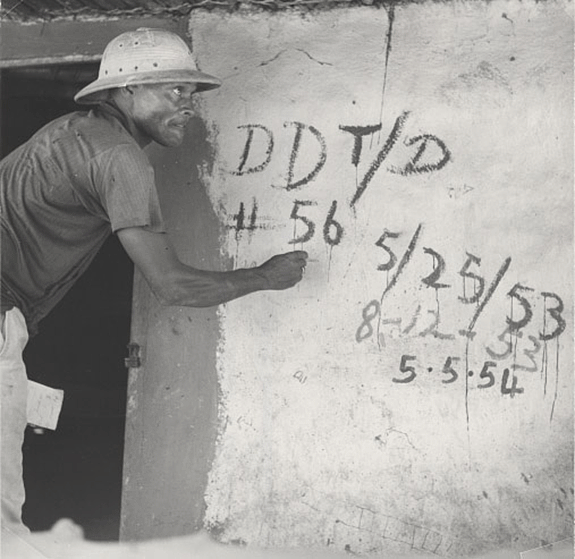

The long struggle against malaria in tropical Africa is instructive. It began to be waged aggressively in the immediate aftermath of the Second World War, when enthusiasm for the public health benefits of synthetic insecticides such as DDT ran high. In 1945, the United States Public Health Service sent a team to Liberia that was charged with the control of the mosquito vectors that transmitted malaria in the capital of Monrovia. They sprayed the houses in Monrovia with DDT and achieved a marked reduction in severe malaria. The program was then extended into the hinterland, into the same areas in which the recent Ebola outbreak took place. There, too, they achieved good results. In line with the WHO’s 1955 decision to launch its Malaria Eradication Program, the Americans sprayed DDT and other residual insecticides to remarkable effect. They reduced malaria transmission to low levels and kept it at low levels for several years. This marked the first large-scale use of synthetic insecticides to control malaria in tropical Africa.

The years of success in malaria control came at a price. The Liberians in the project zones had lost most of their acquired immunity, and when the insecticide spraying stopped, malaria resurged with a vengeance. It struck adults more severely than before the intervention. The Liberian projects were part of a larger mosaic of malaria control and eradication projects that were launched before and during the WHO’s Malaria Eradication Program, largely financed by the United States government.

In the twenty-first century, global health organizations, led by the Gates Foundation, made a new commitment to the global eradication of malaria. This commitment was based upon an incomplete assessment of past experiences with malaria control and eradication. The biomedical and global health communities were broadly unaware, for example, that the first global malaria eradication program had carried out extensive pilot projects in tropical Africa in an effort to develop successful eradication protocols.

As a result, some experiences were repeated. Twenty-first-century malaria eradication programs deployed DDT and other synthetic insecticides in the same West African regions where their predecessors had used a similar array of insecticides. The synthetic insecticides once again produced resistance in the mosquito vectors.

The grand shift in malaria interventions toward the use of synthetic insecticides during the World Health Organization’s Malaria Eradication Program took place on a global scale, and it displaced one of the older approaches to malaria control that had been based on chemical therapy. In the 1930s, in the Belgian Congo, for example, it was recommended clinical practice to treat an African child with quinine regardless of whether or not the child had malarial symptoms. The colonial-era physicians did not know how this treatment worked, but it reportedly reduced childhood mortality by 50 percent among those who received the treatment. This practice was forgotten during the grand paradigm shift. Countless lives were lost as a consequence. Indeed, it was not until the late twentieth-century discovery of the efficacy of intermittent preventive malaria therapy with synthetic malaria drugs for pregnant women and, in later decades, for infants and children, that this practice became part of contemporary therapeutic practice.

Other historical evidence has proven cautionary. In 2013, the global health planners for a major intervention involving mass drug administration using a single dose of primaquine became aware, quite by happenstance, that a single dose of the drug had been used in several earlier malaria control projects in tropical Africa. This was potentially important because a second therapeutic treatment with primaquine could be toxic for individuals with the inherited condition known as G6PD deficiency, the most common enzyme deficiency in human beings, affecting about 400 million people.

Other types of historical records have also proved to be directly relevant to the contemporary malaria eradication campaign. During the Cultural Revolution in China, Mao Zedong ordered researchers to find a cure for malaria in the Chinese pharmacopoeia. The pharmaceutical chemist Tu Youyou discovered a reference to the plant Artemisia annua in a Chinese medical text from the first millennium C.E. She isolated the active antimalarial compound, artemisinin, that became the frontline antimalarial drug. Tu Youyou received the 2015 Nobel Prize in Physiology or Medicine for this important discovery that has saved millions of lives.

Historical epidemiology holds the promise of developing as a fundamental resource for global health. Like some other new fields, it is emerging on the cusp of existing disciplines. One practical order of business will be for doctoral programs in history to facilitate disciplinary training in the public health sciences at the master’s level. Another order of business will be for departments of epidemiology and global health to open their doors to historical epidemiologists and broaden their curricula to expose master’s degree candidates to this field.

Beyond the academy, the philanthropic organizations, nongovernmental organizations, and other global health actors must be encouraged to improve the historical conservation of global health intervention data. Members of the global health community—and the individual states with responsibilities for their populations’ public health—need to collect and preserve records of what has happened in the course of their programs, even if these records include failures, problems, obstacles, and glitches. At present, this is made difficult by the donor culture of program evaluation that exclusively valorizes positive data. It is time to appreciate that there is a great deal to be learned from the past that can improve future interventions.

There are positive developments afoot. The World Health Organization and the Rockefeller Foundation have embarked upon large-scale digitization projects to make their historical records accessible to researchers, and they have made fine progress to date. Most other major global health actors have yet to make such commitments. There is an enormous amount of unpublished “grey literature” of project evaluations and assessments by nongovernmental organizations and national governments that is currently inaccessible.

The emerging field of the historical epidemiology of contemporary disease has the potential to integrate a number of disparate fields of knowledge and to improve the practice of global health. A generation from now, it is likely that historical epidemiology will be fully integrated into the field of global health. Its logical imperative is straightforward. Ignorance of the epidemiological past prevents its lessons from being learned and creates unnecessary vulnerabilities for the global health enterprise.